Creating Character Art with AI: My Quest for Consistency

Creating Character Art with AI: My Quest for Consistency

One of the most exciting (and sometimes frustrating!) challenges in AI image generation is creating compelling and consistent character art. It’s one thing to generate a stunning standalone portrait, but what if you want to see that same character in different poses, outfits, or scenarios? This is where I’ve been spending a lot of my creative energy lately, and I want to share some of the techniques and workflows I’ve found helpful.

Getting true “one-shot” consistency with a character across multiple images is still a holy grail for many AI tools without advanced techniques like training custom models (LoRAs) or heavy photobashing. However, with smart prompting and an iterative approach, we can get surprisingly close and, at the very least, create a series of images that feel like they belong to the same character concept.

The Core Challenge: AI’s “Amnesia”

The main hurdle is that most general-purpose AI image generators don’t inherently “remember” a character from one generation to the next. Each prompt is, in essence, a new request. So, if you generate a “brave knight with a scar over his left eye” and then ask for “the same knight fighting a dragon,” the AI might generate a brave knight, but the scar could be missing, his armor different, or even his facial features subtly (or drastically) changed.

So, how do we combat this “amnesia” and guide the AI towards a more consistent vision?

My Strategy: Iteration, Specificity, and a Bit of Luck

My approach isn’t a magic bullet, but a combination of strategies:

1. Detailed Initial Character Conception (The “Seed” Prompt)

Everything starts with a strong foundational concept for your character. I spend time thinking about:

- Key Visual Identifiers: What are 2-3 absolutely unmissable features? E.g., “fiery red hair in a short bob,” “a distinct silver amulet,” “piercing green eyes,” “a specific facial tattoo.”

- Overall Archetype/Style: “Cyberpunk street samurai,” “ethereal forest elf,” “gritty steampunk inventor.”

- Personality/Expression (Optional but helpful): “Stoic and determined,” “mischievous grin,” “weary but resolute.”

I then craft a detailed “seed prompt” that incorporates these elements.

Example Seed Prompt:

Portrait of Elara, a young female space pirate, fiery red hair in a messy short bob, piercing emerald green eyes, a small silver hoop earring in her left ear, mischievous smirk, wearing a worn leather jacket over a dark t-shirt, cyberpunk neon-lit alley background, cinematic lighting, detailed, concept art style.

(Imagine an AI-generated portrait based on the prompt above for “Elara”.)

(Imagine an AI-generated portrait based on the prompt above for “Elara”.)

2. Locking Down the “Look” with Iteration and Variations

Once I get an initial generation that I really like from the seed prompt (this might take several re-rolls or slight prompt tweaks!), I try to “lock in” that specific look.

- Using Seeds (if your tool supports it): In tools like Stable Diffusion, you can reuse the seed of a successful generation. This tells the AI to start from a similar random noise pattern, which can help maintain consistency if you only make minor changes to the prompt.

- Midjourney’s Vary (Subtle) & Vary (Strong): If I get a good base in Midjourney, I use the

Vbuttons.Vary (Subtle)is often my first choice to get slight variations that might refine features without losing the core likeness.Vary (Strong)can be useful for more significant pose or outfit changes, but you risk losing consistency. - Referencing the Image (Image-to-Image or “Remix Mode”):

- Img2Img (Stable Diffusion): I’ll take the best initial generation and use it as an input for img2img, providing a new prompt for a different pose or scene but keeping the character description elements. The “denoising strength” parameter is crucial here – lower values stick closer to the input image, higher values allow more change.

- Midjourney’s “Remix Mode” with Image Prompts: I enable Remix mode (

/prefer remix) and then, when varying an image, I can edit the prompt. I often keep the original detailed character description and add the new action or setting. Sometimes, I’ll even include the URL of the best “base” image as an image prompt at the beginning of the new text prompt to further guide the AI.

3. Consistent Phrasing and Negative Prompts

When creating subsequent images, I try to reuse the exact same phrasing for the core character descriptors from my seed prompt.

- Character Descriptor Block: I’ll copy-paste “fiery red hair in a messy short bob, piercing emerald green eyes, a small silver hoop earring in her left ear” into every new prompt for Elara.

- Negative Prompts for Inconsistency: If I notice the AI keeps changing something specific (e.g., hair color, eye color), I might add negative prompts like

blue hair, brown eyes, long hairto steer it away from unwanted variations.

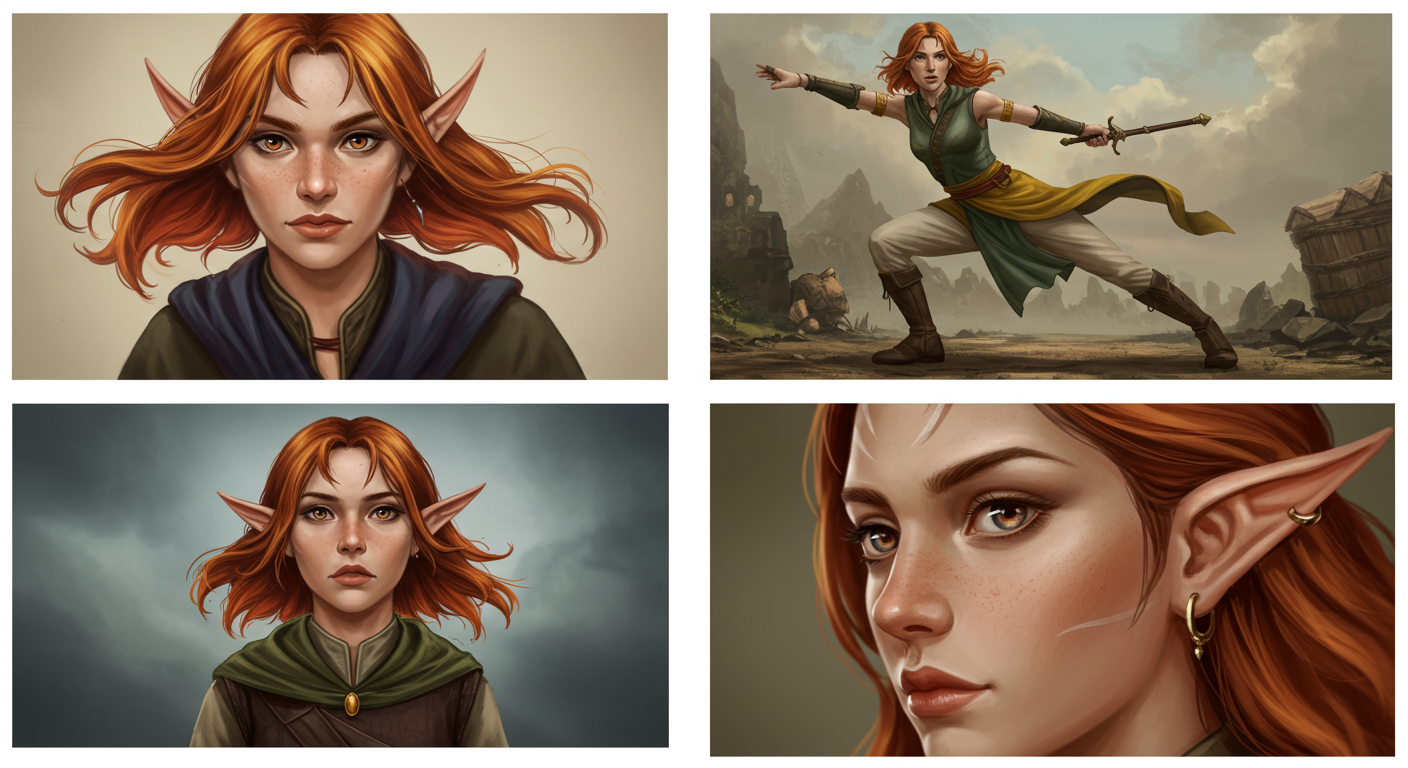

4. “Character Sheets” and Scene Setting

For trying different poses or outfits, I often create a series of prompts based on a “character sheet” idea:

- Portrait: (My seed prompt)

- Action Pose:

Elara, [core descriptors], dynamic action pose, leaping across rooftops, cyberpunk cityscape, [style] - Different Outfit:

Elara, [core descriptors], wearing a stealthy black jumpsuit, infiltrating a high-tech facility, [style] - Interacting with Environment/Props:

Elara, [core descriptors], piloting a small starfighter, cockpit view, nebula in background, [style]

The key is to change only one major aspect (pose, outfit, setting) at a time while keeping the core character description identical.

(Imagine a 2x2 grid showing “Elara” from the seed prompt, then Elara in an action pose, a different outfit, and maybe a close-up focusing on her eyes and earring.)

(Imagine a 2x2 grid showing “Elara” from the seed prompt, then Elara in an action pose, a different outfit, and maybe a close-up focusing on her eyes and earring.)

5. Advanced Techniques (A Glimpse)

While the above can get you quite far, for true consistency, especially for professional use or detailed storytelling, more advanced methods are often needed:

- Training Custom Models (LoRAs/Embeddings): This involves training a small, specialized model on images of your specific character (either real photos or a set of AI generations you’ve curated). This “teaches” the AI your character’s likeness much more deeply. This is a more involved process but offers the highest level of consistency. I’m currently exploring this for some of my key characters!

- ControlNet (Stable Diffusion): Using ControlNet with OpenPose (for poses), Canny edges (for outlines), or Depth maps can help maintain character structure and pose while changing other elements.

- Photobashing/Manual Editing: Sometimes, the best way to ensure consistency for a critical detail (like a specific scar or accessory) is to manually edit it in using Photoshop or GIMP, or to combine elements from different AI generations.

Embrace “Familial Resemblance” Over Identical Twins

It’s important to set realistic expectations. Unless you’re using advanced techniques like LoRA training, achieving a pixel-perfect identical character across dozens of images is extremely challenging. I’ve learned to aim for a “strong familial resemblance” – the character should be instantly recognizable, even if there are minor variations in facial structure or hairstyle from image to image. Think of it like different comic book artists drawing the same character; there are variations, but the core identity remains.

The Joy of Bringing Characters to Life

Despite the challenges, the process of coaxing a consistent character out of the AI ether is incredibly rewarding. It feels like a true collaboration between human intent and machine creativity. Each “successful” iteration, where the character looks right and fits the new scene, is a small victory.

This is an area of AI art generation that’s rapidly evolving, and new tools and techniques are emerging all the time. My workflow today might be different in six months! But the core principles of specific prompting, iteration, and a clear vision for your character will always be valuable.

What are your go-to methods for creating consistent AI characters? Have you found any particular tricks or tools that work well? I’d love to hear your experiences and tips in the comments!